Detection of Mental Health Disorder from Social Media Data

DOI:

https://doi.org/10.26438/ijcse/v13i11.9098

Keywords:

BERT, Explainable AI, Interpretable Deep Learning, Mental Health Detection, Social Media Analytics

Abstract

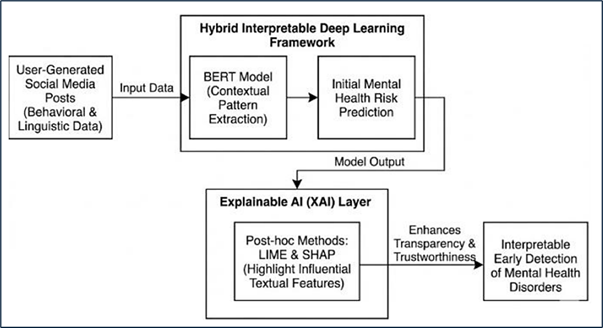

The growing prevalence of mental health disorders has underscored the urgent need for early and scalable detection methods. Social media platforms provide a rich source of behavioral and linguistic data that can serve as indicators of mental health status. This study proposes an interpretable deep learning framework for the early detection of mental health disorders using a hybrid approach that integrates Bidirectional Encoder Representations from Transformers (BERT) with Explainable Artificial Intelligence (XAI) techniques. The model leverages BERT’s contextual language understanding capabilities to extract nuanced emotional, cognitive, and linguistic patterns from user-generated social media posts. To enhance transparency and trustworthiness, post-hoc explainability methods such as LIME and SHAP are applied to interpret the model’s predictions and highlight influential textual features contributing to mental health risk assessment. Experimental evaluations on benchmark datasets demonstrate that the proposed hybrid model achieves superior accuracy and interpretability compared to traditional deep learning baselines. The findings suggest that combining transformer-based models with explainable AI can provide reliable and ethically responsible tools for early intervention and mental health monitoring in digital environments.
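The idea of post-hoc, token-level explanation mentioned above can be illustrated with a minimal sketch. It is not the authors' implementation: the fine-tuned BERT classifier is replaced by a hypothetical toy scoring function (`risk_score` with an invented `TOY_WEIGHTS` lexicon), and LIME and SHAP are replaced by plain leave-one-out occlusion, a simpler perturbation-based relative of both. All names and weights here are assumptions made for illustration only.

```python
import math

# Hypothetical toy lexicon standing in for a fine-tuned BERT classifier.
# The words and weights are invented for illustration, not taken from the paper.
TOY_WEIGHTS = {"hopeless": 2.0, "tired": 1.0, "alone": 1.5, "happy": -2.0}


def risk_score(tokens):
    """Toy stand-in for a model's risk probability: sigmoid of summed token weights."""
    z = sum(TOY_WEIGHTS.get(tok.lower(), 0.0) for tok in tokens)
    return 1.0 / (1.0 + math.exp(-z))


def occlusion_attributions(tokens, score_fn=risk_score):
    """Leave-one-out attribution: how much the score drops when each token is removed."""
    base = score_fn(tokens)
    return [
        (tok, base - score_fn(tokens[:i] + tokens[i + 1:]))
        for i, tok in enumerate(tokens)
    ]


post = "I feel hopeless and alone".split()

# Rank tokens by how strongly their removal lowers the predicted risk.
ranked = sorted(occlusion_attributions(post), key=lambda pair: pair[1], reverse=True)

for tok, delta in ranked:
    print(f"{tok:>10s} {delta:+.3f}")
```

In a real pipeline, `score_fn` would wrap the trained model's predicted probability for the at-risk class, and a library such as LIME or SHAP would typically replace the leave-one-out loop with sampled perturbations and a principled weighting scheme.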

License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.