Autonomous Underwater Navigation Utilizing Computational Fluid Dynamics Guided Reinforcement Learning

DOI:

https://doi.org/10.26438/ijcse/v13i7.4150

Keywords:

Autonomous Underwater Vehicle, Computational Fluid Dynamics, Reinforcement Learning, Coral Reef Monitoring, Soft Actor-Critic, Ocean Robotics, Pressure Sensors, Environmental Monitoring

Abstract

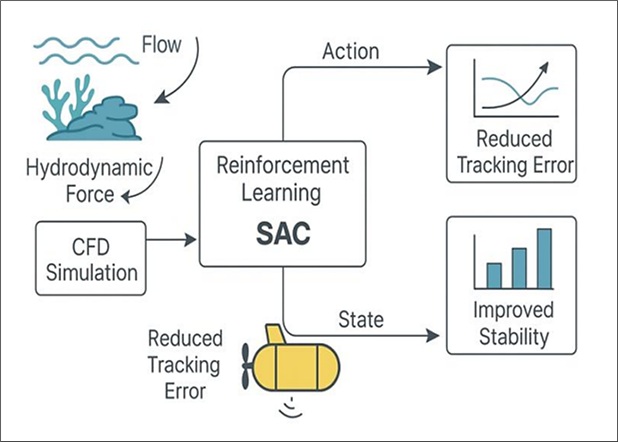

Coral reefs, home to over 25% of marine biodiversity, have declined by over 50% in the last 30 years due to climate change and pollution. They provide habitat and shelter for over 4,000 species of fish and protect coastal communities by reducing wave energy by 97%. Traditional monitoring methods such as diver-led surveys, satellite imaging, and pre-programmed AUVs are inefficient and adapt poorly to turbulent conditions, often resulting in incomplete data collection. This project evaluates whether reinforcement learning (RL) agents trained in a computational fluid dynamics (CFD) environment can improve autonomous underwater vehicle (AUV) navigation in such conditions, and whether adding flow pressure sensor data improves performance further. A high-fidelity CFD environment simulated realistic turbulent currents, reef obstacles, and dynamic conditions. Two RL agents were trained with the Soft Actor-Critic (SAC) algorithm: one with standard position-velocity feedback and another augmented with pressure-based hydrodynamic force feedback. Performance metrics were episode length, cumulative reward, and final position and heading error, with statistical tests assessing significance. Both agents navigated the turbulence successfully, but the pressure-augmented agent consistently achieved longer episode durations and higher rewards, indicating faster convergence and greater stability. In testing, this agent significantly outperformed the baseline, holding steady-state position error near zero (~0.02±0.01 m versus 0.20±0.05 m for the baseline, p<0.05) with smaller heading deviations. Integrating RL with CFD enabled effective AUV navigation in complex flows, and pressure feedback improved control, precision, and robustness. This approach could lead to safer, more efficient AUV operations in challenging marine environments.
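The difference between the two agents' inputs can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration, not the authors' implementation: the planar (2-D) state, the `VehicleState` class, the four-sensor pressure array, and the choice to feed pressure readings relative to a reference value are all hypothetical. The abstract states only that one agent received position-velocity feedback and the other additionally received pressure-based hydrodynamic force feedback.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class VehicleState:
    """Hypothetical planar AUV state (an assumption, not from the paper)."""
    position: Sequence[float]  # (x, y) in metres
    velocity: Sequence[float]  # (vx, vy) in metres per second
    heading: float             # radians


def baseline_observation(state: VehicleState, goal: Sequence[float]) -> List[float]:
    """Position-velocity feedback only: goal offset, velocity, heading."""
    dx = goal[0] - state.position[0]
    dy = goal[1] - state.position[1]
    return [dx, dy, state.velocity[0], state.velocity[1], state.heading]


def pressure_augmented_observation(
    state: VehicleState,
    goal: Sequence[float],
    pressures: Sequence[float],
    reference_pressure: float = 0.0,
) -> List[float]:
    """Baseline observation with hull pressure readings appended.

    Readings are expressed relative to an assumed reference pressure so the
    policy sees flow-induced deviations rather than absolute values.
    """
    base = baseline_observation(state, goal)
    return base + [p - reference_pressure for p in pressures]


# Illustrative values only; they are not data from the study.
state = VehicleState(position=(1.0, 2.0), velocity=(0.5, 0.0), heading=0.1)
goal = (4.0, 6.0)
obs_baseline = baseline_observation(state, goal)
obs_pressure = pressure_augmented_observation(
    state, goal, pressures=[101.0, 99.5, 100.2, 100.0], reference_pressure=100.0
)
```

In this sketch the pressure-augmented observation is a strict superset of the baseline one, so any difference in learned behaviour between two otherwise identical SAC agents could be attributed to the extra pressure channels.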

License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.