Advancements in AI-Based Compiler Optimization Techniques for Machine Learning Workloads

DOI:

https://doi.org/10.26438/ijcse/v13i3.7077

Keywords:

AI-based compilers, reinforcement learning, neural architecture search, machine learning, compiler optimization

Abstract

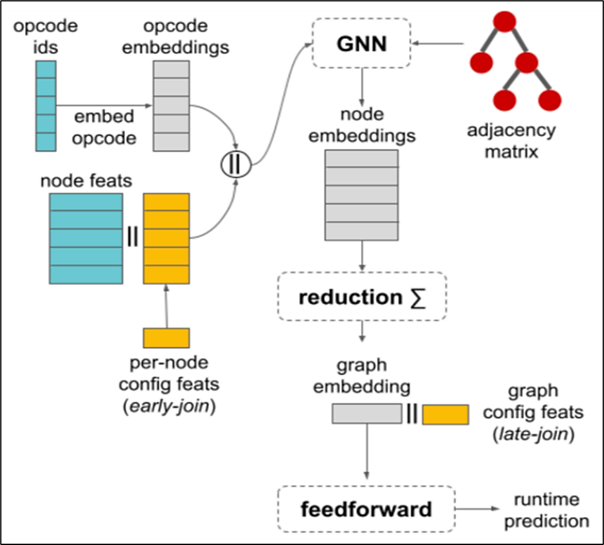

This paper explores AI-driven compiler optimization techniques for machine learning (ML) workloads, with a focus on reinforcement learning (RL) and neural architecture search (NAS). It compares the performance of traditional compilers with that of AI-optimized compilers leveraging various ML models, including CNNs, RNNs, FNNs, and transformers. The results indicate that AI-driven compilers, particularly those using a hybrid RL + NAS approach, outperform traditional compilers in energy consumption, memory usage, execution time, and hardware utilization. Additionally, the findings suggest that AI-based optimization techniques can streamline ML pipeline development, improving efficiency and performance in both resource-constrained environments and large-scale applications.

License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.