Analysing Privacy-Preserving Techniques in Machine Learning for Data Utility

DOI:

https://doi.org/10.26438/ijcse/v13i2.6470

Keywords:

Privacy-Preserving, Machine Learning, Noise, K-Anonymity, Data Utility

Abstract

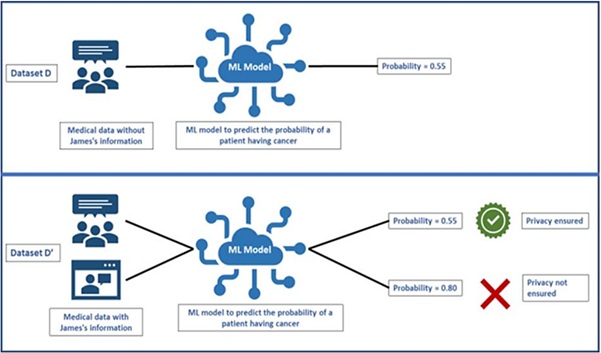

Data privacy is a critical challenge in publicly shared datasets. This study investigates how privacy-preserving techniques, namely Gaussian noise addition and k-anonymity-based generalization under varying values of ε, affect data utility. Using a dataset related to stress prediction, we apply these techniques to safeguard sensitive attributes and assess their impact on machine learning models. Logistic Regression, Random Forest, and k-Nearest Neighbours (KNN) are used to evaluate how well utility is preserved. Our results highlight the trade-off between privacy and predictive performance, demonstrating that k-anonymity generalization maintains better model accuracy than noise addition. These findings contribute to privacy-aware machine learning and are applicable to domains handling sensitive demographic and financial data.
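The two transformations named in the abstract can be illustrated with a minimal Python sketch. The abstract does not say how the noise was calibrated, so this sketch assumes the classic Gaussian mechanism from differential privacy, σ = Δ·√(2 ln(1.25/δ))/ε, with a hypothetical δ = 10⁻⁵ and sensitivity Δ = 1 (as for features scaled to [0, 1]); it is not the authors' method. For k-anonymity, a table is k-anonymous when every combination of quasi-identifier values is shared by at least k records; here the generalization step is a simple fixed-width age binning, chosen only for illustration. The helper names (`gaussian_sigma`, `add_gaussian_noise`, `generalize_age`, `is_k_anonymous`), the `stress_score` attribute, and the toy records are all hypothetical.

```python
import math
import random
from collections import Counter


def gaussian_sigma(epsilon, delta=1e-5, sensitivity=1.0):
    """Noise scale of the classic Gaussian mechanism (its analysis assumes 0 < epsilon < 1).

    delta and sensitivity defaults are illustrative assumptions, not values from the study.
    """
    if not 0 < epsilon < 1:
        raise ValueError("the classic Gaussian mechanism bound assumes 0 < epsilon < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


def add_gaussian_noise(values, epsilon, delta=1e-5, sensitivity=1.0, seed=0):
    """Add zero-mean Gaussian noise to each value; a smaller epsilon means more noise."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    sigma = gaussian_sigma(epsilon, delta, sensitivity)
    return [v + rng.gauss(0.0, sigma) for v in values]


def generalize_age(age, width=10):
    """Replace an exact age with a fixed-width range, e.g. 34 -> '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return bool(groups) and min(groups.values()) >= k


# Hypothetical toy records; not data from the study.
records = [
    {"age": 23, "gender": "F", "stress_score": 0.62},
    {"age": 27, "gender": "F", "stress_score": 0.48},
    {"age": 34, "gender": "M", "stress_score": 0.71},
    {"age": 38, "gender": "M", "stress_score": 0.55},
]

print(is_k_anonymous(records, ["age", "gender"], k=2))  # False: every exact age is unique

# Generalize the quasi-identifier "age" into 10-year bins.
generalized = [{**r, "age": generalize_age(r["age"])} for r in records]
print(is_k_anonymous(generalized, ["age", "gender"], k=2))  # True: two records per group

# Perturb the (hypothetical) sensitive numeric attribute instead.
noisy = add_gaussian_noise([r["stress_score"] for r in records], epsilon=0.5)
print([round(x, 2) for x in noisy])
```

Under these assumed parameters, ε = 0.5 gives σ of roughly 9.7, which swamps features in [0, 1]; this is one way the utility loss from noise addition can arise. Generalization, by contrast, keeps every value truthful but coarser, and wider bins make larger groups (easier to reach k) at the cost of detail.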



License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.