Application of Text Mining using Convolutional Neural Network for English Grammar Correction

DOI:

https://doi.org/10.26438/ijcse/v13i1.6470

Keywords:

Natural Language Processing (NLP), Text Mining (TM), Convolutional Neural Networks (CNNs), English Grammar

Abstract

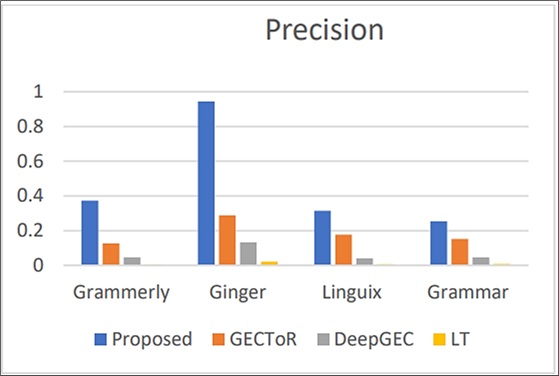

The application of text mining in natural language processing (NLP) has gained significant attention in recent years, particularly for tasks such as grammar correction, syntactic parsing, and error detection. One promising approach to these tasks is the Convolutional Neural Network (CNN), which, although originally designed for image recognition, has proven highly effective at extracting hierarchical patterns from sequential data, including text. This paper explores the application of CNNs to English grammar correction, leveraging their ability to identify local dependencies and complex grammatical structures within sentences. The approach trains CNN models on large corpora of annotated text to automatically detect and correct grammatical errors such as subject-verb agreement violations, tense inconsistencies, and word-order mistakes. By convolving over word sequences, CNNs recognize syntactic relationships and learn contextual cues that help distinguish grammatically correct forms from erroneous ones. The paper also discusses the benefits of CNN-based grammar correction, including improved accuracy, scalability, and adaptability to diverse linguistic contexts. Experimental results demonstrate the effectiveness of this method compared with traditional grammar correction techniques, highlighting its potential for automated writing assistance tools, language-learning applications, and real-time text editing systems. Ultimately, integrating CNNs into text mining for grammar correction represents a promising avenue for advancing automated language processing systems and improving the efficiency of text-based communication.
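The abstract states that CNNs detect errors by convolving over word sequences. The Python sketch below illustrates only that detection idea and is not the paper's model: a width-3 filter slides over per-token embedding vectors, a sigmoid turns each position's feature into an error score, and max-over-time pooling (as in Kim's 2014 sentence-classification CNN) yields a sentence-level feature. The two-dimensional embeddings, the hand-set filter weights, and all function names (`conv1d_same`, `token_error_scores`, `max_over_time`, `detect`) are hypothetical; a real system would learn embeddings and filters from annotated corpora and would need a separate step to generate corrections.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def conv1d_same(seq: Sequence[Vector], filters: Sequence[Sequence[Vector]],
                biases: Sequence[float]) -> List[Vector]:
    """Slide each filter (k rows of d weights, k odd) over the token
    sequence with zero padding, so the output has one row per token.
    Returns a T x F feature map after a ReLU."""
    t, half = len(seq), len(filters[0]) // 2
    zero = [0.0] * len(seq[0])
    out = []
    for i in range(t):
        row = []
        for kernel, b in zip(filters, biases):
            s = b
            for j, w_row in enumerate(kernel):
                pos = i + j - half  # offsets -half..+half around token i
                vec = seq[pos] if 0 <= pos < t else zero
                s += sum(w * x for w, x in zip(w_row, vec))
            row.append(max(0.0, s))  # ReLU
        out.append(row)
    return out


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def token_error_scores(features: List[Vector], w: Vector, b: float) -> List[float]:
    """Per-token probability that the token is part of an error."""
    return [sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) for f in features]


def max_over_time(features: List[Vector]) -> Vector:
    """Sentence-level vector: maximum of each filter over all positions."""
    return [max(col) for col in zip(*features)]


# Hypothetical 2-d embeddings: dim 0 = "3rd-person singular subject",
# dim 1 = "base-form (non-3sg) verb". A trained model would learn these.
EMB: Dict[str, Vector] = {
    "he": [1.0, 0.0], "she": [1.0, 0.0],
    "go": [0.0, 1.0], "goes": [0.0, 0.0], "home": [0.0, 0.0],
}

# One width-3 filter that fires on a base-form verb (offset 0, dim 1) whose
# left neighbour (offset -1, dim 0) is a singular subject. Hand-set, not learned.
AGREEMENT_FILTER = [[1.0, 0.0],   # offset -1
                    [0.0, 1.0],   # offset  0
                    [0.0, 0.0]]   # offset +1
FILTERS, BIASES = [AGREEMENT_FILTER], [-1.5]


def detect(sentence: str, threshold: float = 0.5) -> List[str]:
    """Return the tokens whose error score exceeds the threshold."""
    tokens = sentence.lower().split()
    seq = [EMB.get(tok, [0.0, 0.0]) for tok in tokens]  # unknown words -> zeros
    feats = conv1d_same(seq, FILTERS, BIASES)
    scores = token_error_scores(feats, w=[4.0], b=-1.0)
    return [tok for tok, p in zip(tokens, scores) if p > threshold]


print(detect("He go home"))    # ['go']
print(detect("He goes home"))  # []
feats = conv1d_same([EMB[w] for w in "he go home".split()], FILTERS, BIASES)
print(max_over_time(feats))    # [0.5]
```

Because the filter spans three tokens, it can only relate a verb to its immediate neighbours; stacking convolutional layers or widening the filters enlarges this receptive field, which is one way a CNN can move from local patterns toward longer-range agreement.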



License

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors contributing to this journal agree to publish their articles under the Creative Commons Attribution 4.0 International License, allowing third parties to share their work (copy, distribute, transmit) and to adapt it, under the condition that the authors are given credit and that in the event of reuse or distribution, the terms of this license are made clear.